What Is Undersampling?

Undersampling is a technique to balance uneven datasets by keeping all of the data in the minority class and decreasing the size of the majority class. It is one of several techniques data scientists can use to extract more accurate information from originally imbalanced datasets. Though it has disadvantages, such as the loss of potentially important information, it remains a common and important skill for data scientists.

Undersampling vs. Oversampling for Imbalanced Datasets

Many organizations that collect data end up with imbalanced datasets, in which one section of the data, called a class, has significantly more events than another. This difference in size between two or more classes is a class imbalance, and it can be slight or severe. For example, the ratio between two classes could be 3:1 or it could be 1,000:1. When a dataset has more than two classes, the distribution among them can vary considerably.
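As a small illustration of the ratios mentioned above, the sketch below counts the events in each class and divides the largest count by the smallest. The function name `imbalance_ratio` and the toy labels are assumptions made for this example only.

```python
from collections import Counter

def imbalance_ratio(labels):
    """Return (largest class size / smallest class size, per-class counts)."""
    counts = Counter(labels)
    majority = max(counts.values())
    minority = min(counts.values())
    return majority / minority, counts

# Toy dataset: 750 valid events and 250 fraudulent events, a 3:1 imbalance.
labels = ["valid"] * 750 + ["fraud"] * 250
ratio, counts = imbalance_ratio(labels)
print(ratio, dict(counts))
```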

Data scientists and machine learning models have a hard time gaining accurate information from imbalanced classes of data. An analysis of imbalanced datasets can lead to biased results, particularly in machine learning. Without figuring out an appropriate way to balance the information they have, data scientists may not gain much insight into the issue at hand or make actionable recommendations to their employers.

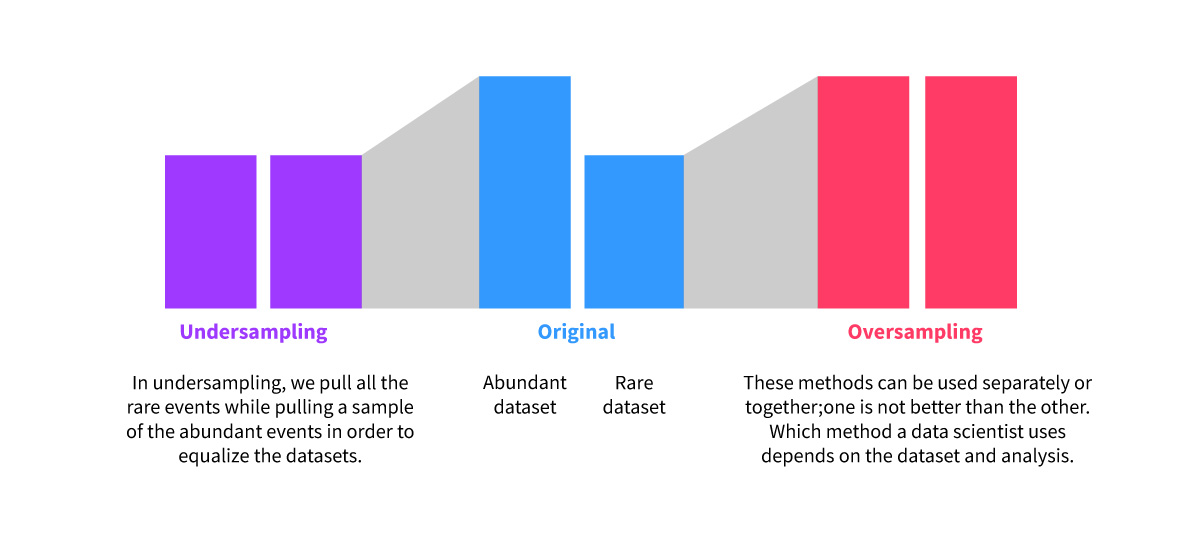

Two techniques data scientists can use to balance datasets are oversampling and undersampling.

Oversampling is appropriate when data scientists do not have enough information. One class is abundant, or the majority, and the other is rare, or the minority. In oversampling, the scientist increases the number of rare events by creating artificial events. One technique for doing so is the synthetic minority oversampling technique (SMOTE).
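The core idea behind SMOTE can be sketched in a few lines: pick a minority event, pick one of its nearest minority neighbors, and create a new event somewhere on the line between them. The code below is a simplified illustration using only the Python standard library, not a full SMOTE implementation. The function name `smote_like`, the default of three neighbors, and the toy points are assumptions.

```python
import math
import random

def smote_like(minority, n_new, k=3, seed=0):
    """Create n_new synthetic points by interpolating between minority neighbors."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        # The k nearest other minority points to the chosen base point.
        others = [q for j, q in enumerate(minority) if j != i]
        neighbors = sorted(others, key=lambda q: math.dist(base, q))[:k]
        neighbor = rng.choice(neighbors)
        t = rng.random()  # position along the segment from base to neighbor
        synthetic.append(tuple(a + t * (b - a) for a, b in zip(base, neighbor)))
    return synthetic

# Toy minority class with two features per event (hypothetical values).
minority = [(1.0, 1.0), (1.5, 1.2), (2.0, 1.0), (1.2, 2.0)]
new_points = smote_like(minority, n_new=6)
```

Because each synthetic event lies between two real minority events, the new events stay inside the region the minority class already occupies.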

Undersampling is appropriate when there is plenty of data for an accurate analysis. The data scientist uses all of the rare events but reduces the number of abundant events to create two equally sized classes. Typically, scientists randomly delete events in the majority class to end up with the same number of events as the minority class.

Neither method is inherently better than the other, and they can be used separately or together. Which method a data scientist uses depends on the dataset and analysis.

Undersampling Techniques

There are several undersampling techniques data scientists can use.

Random undersampling involves randomly deleting events from the majority class. Alternatively, instead of removing events at random, data scientists can apply a rule to decide which events to keep and which to remove.
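Random undersampling is simple enough to sketch with the standard library alone. The example below keeps every event in the smallest class and draws a random sample of the same size from each larger class. The function name `random_undersample`, the fixed seed, and the toy data are assumptions for illustration.

```python
import random

def random_undersample(samples, labels, seed=0):
    """Randomly drop events from larger classes until every class matches the smallest."""
    rng = random.Random(seed)
    by_class = {}
    for x, y in zip(samples, labels):
        by_class.setdefault(y, []).append(x)
    target = min(len(xs) for xs in by_class.values())
    out_x, out_y = [], []
    for y, xs in by_class.items():
        # Classes already at the target size are kept whole.
        kept = xs if len(xs) == target else rng.sample(xs, target)
        out_x.extend(kept)
        out_y.extend([y] * target)
    return out_x, out_y

# Toy data: events 0-49 are the minority class (label 1), the other 950 are majority (label 0).
samples = list(range(1000))
labels = [1 if i < 50 else 0 for i in range(1000)]
X_res, y_res = random_undersample(samples, labels)
```

After resampling, both classes hold 50 events, and 900 majority events have been discarded without regard to their content.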

There are undersampling methods that are focused on which events the data scientists should keep.

Data scientists can use one of three near-miss undersampling techniques, each of which selects majority class events based on their distance to minority class events on a scatter plot. In the first (NearMiss-1), they keep events from the majority class that have the smallest average distance to the three closest events from the minority class. In the second (NearMiss-2), they keep events from the majority class that have the smallest average distance to the three furthest events from the minority class. In the third (NearMiss-3), they keep a given number of the closest majority class events for each event in the minority class.
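The first two near-miss variants differ only in which minority events they measure against, so one small function can illustrate both. This is a minimal sketch assuming events are tuples of numeric features and distance is Euclidean. The function name `near_miss`, the `farthest` flag, and the toy points are hypothetical.

```python
import math

def near_miss(majority, minority, n_keep, k=3, farthest=False):
    """Keep the n_keep majority points with the smallest mean distance to
    their k closest (or, if farthest=True, k furthest) minority points."""
    def score(p):
        dists = sorted(math.dist(p, q) for q in minority)
        chosen = dists[-k:] if farthest else dists[:k]
        return sum(chosen) / len(chosen)
    return sorted(majority, key=score)[:n_keep]

# Toy data with two features per event.
minority = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0)]
majority = [(1.0, 1.0), (5.0, 5.0), (2.0, 2.0), (9.0, 9.0)]
kept = near_miss(majority, minority, n_keep=2)
```

With these toy points, the two majority events nearest the minority cluster, (1.0, 1.0) and (2.0, 2.0), are kept.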

Some situations call for condensed nearest neighbors (CNN) undersampling. This technique looks for a smaller subset of events that can still classify the dataset correctly. The scientist goes through the events of a dataset and adds an event to the “store” only if it cannot be classified correctly based on the current contents of the store. The store includes all of the events in the minority class and only events from the majority class that cannot be classified correctly.
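The store-building procedure can be sketched as a loop that repeats until no more events are added. The example below is a simplified illustration, assuming a one-nearest-neighbor classifier, Euclidean distance, and a store seeded with the first majority event. Those choices, the function name, and the toy points are assumptions rather than a reference implementation.

```python
import math

def condensed_nearest_neighbors(majority, minority):
    """Return the majority points kept by a 1-NN condensed nearest neighbors pass."""
    # The store holds (point, label) pairs; label 1 = minority, 0 = majority.
    store = [(p, 1) for p in minority]
    store.append((majority[0], 0))  # seed with one majority event (an assumption)
    kept = [majority[0]]
    remaining = list(majority[1:])
    changed = True
    while changed:
        changed = False
        still_remaining = []
        for p in remaining:
            nearest_label = min(store, key=lambda s: math.dist(p, s[0]))[1]
            if nearest_label != 0:
                # The store misclassifies this majority event, so add it.
                store.append((p, 0))
                kept.append(p)
                changed = True
            else:
                still_remaining.append(p)
        remaining = still_remaining
    return kept

minority = [(0.0, 0.0), (1.0, 0.0)]
majority = [(5.0, 5.0), (5.5, 5.0), (6.0, 6.0), (1.5, 0.0), (9.0, 9.0)]
kept = condensed_nearest_neighbors(majority, minority)
```

Here only the seed event and the majority event sitting next to the minority cluster, (1.5, 0.0), end up in the store. The other majority events are already classified correctly and are dropped as redundant.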

Other methods are focused on which events to delete.

The Tomek Links method comes from two modifications to CNN that Ivan Tomek proposed in 1976. One modification finds pairs of events, one from the majority class and the other from the minority class, that are each other's nearest neighbors on a scatter plot. These pairs are Tomek Links, and their events are considered borderline or noise. Removing the majority class event from each pair does not get rid of much of the majority class, which is why the method is often combined with other techniques.
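A Tomek Link can be detected by checking for pairs of events from different classes that are each other's nearest neighbor. The sketch below does this by brute force with Euclidean distance. The function name `tomek_links` and the toy points are assumptions for illustration.

```python
import math

def tomek_links(points, labels):
    """Return index pairs (i, j), i < j, that are mutual nearest neighbors
    and belong to different classes."""
    def nearest(i):
        return min((j for j in range(len(points)) if j != i),
                   key=lambda j: math.dist(points[i], points[j]))
    nn = [nearest(i) for i in range(len(points))]
    return [(i, j) for i, j in enumerate(nn)
            if i < j and nn[j] == i and labels[i] != labels[j]]

# Label 0 = majority, label 1 = minority.
points = [(0.0, 0.0), (0.1, 0.0), (3.0, 3.0), (3.2, 3.0), (6.0, 6.0)]
labels = [0, 1, 0, 0, 1]
links = tomek_links(points, labels)

# For undersampling, drop the majority member of each link.
to_remove = {i for pair in links for i in pair if labels[i] == 0}
```

In this toy data only the first two points form a link. The points at indices 2 and 3 are mutual nearest neighbors but share a class, so they are not a Tomek Link.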

Another method is edited nearest neighbors (ENN). In this technique, scientists look at the three nearest neighbors to each event on a scatter plot. When an event is part of the majority class and is misclassified based on its three nearest neighbors, it is removed. When an event is part of the minority class and it is misclassified based on its three nearest neighbors, then its nearest neighbors in the majority class are removed. This technique also can be combined with other undersampling methods.
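The ENN rule described above can be sketched as follows, assuming Euclidean distance and a majority vote among the three nearest neighbors. The function name, the `majority_label` parameter, and the toy data are hypothetical.

```python
import math
from collections import Counter

def edited_nearest_neighbors(points, labels, majority_label, k=3):
    """Return the indices kept after applying the ENN rule once."""
    remove = set()
    for i, p in enumerate(points):
        others = sorted((j for j in range(len(points)) if j != i),
                        key=lambda j: math.dist(p, points[j]))
        neighbors = others[:k]
        predicted = Counter(labels[j] for j in neighbors).most_common(1)[0][0]
        if predicted == labels[i]:
            continue  # classified correctly, nothing to remove
        if labels[i] == majority_label:
            remove.add(i)  # misclassified majority event
        else:
            # Misclassified minority event: remove its majority neighbors.
            remove.update(j for j in neighbors if labels[j] == majority_label)
    return [i for i in range(len(points)) if i not in remove]

# A majority cluster, a minority cluster, and one majority event inside the minority cluster.
points = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0),
          (5.0, 5.0), (5.0, 6.0), (6.0, 5.0), (5.5, 5.5)]
labels = [0, 0, 0, 0, 1, 1, 1, 0]
keep = edited_nearest_neighbors(points, labels, majority_label=0)
```

The stray majority event at index 7 is surrounded by minority events, so it is the only one removed.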

Data scientists do not have to rely on one of these methods alone, and many scientists have explored combinations of these techniques.

One-sided selection (OSS) combines Tomek Links and CNN. Data scientists first identify Tomek Links on the class boundary and remove the majority class events in them. This removes noisy and borderline majority events. Then they use CNN to delete redundant events from the majority class that are not near the decision boundary.

Neighborhood cleaning rule (NCR) combines CNN and ENN. A data scientist removes redundant events through CNN. Then, they remove noisy or ambiguous events through ENN. This technique is considered to be less concerned with balancing the two classes and more concerned with leaving behind the highest quality data.

Example of Undersampling in Machine Learning

Consider fraud detection in banking. The banking events, like debit or credit card transactions or money transfers, are predominantly valid. Only a small percentage of events are fraudulent, which leads to an imbalanced dataset. For the bank or credit card company’s machine learning algorithm to draw conclusions about fraudulent transactions, it has to account for this difference. Failing to resample the imbalanced classes would lead algorithms to skew toward the majority. Conclusions the business draws from the imbalanced dataset may be unreliable, and actions it takes based on the analysis may not be effective.

If an organization is trying to get better at identifying fraudulent events, imbalanced data could skew it toward wrongly labeling transactions. The business would end up with many false negatives (a transaction labeled lawful when it is fraudulent) and false positives (a transaction deemed fraudulent when it is valid). Both issues are a problem for the bank or credit card company’s customers and could impact its bottom line.

For banks or credit card companies to gain real insight from imbalanced datasets, they use resampling. Undersampling is one resampling method, which can be used alone or in conjunction with oversampling. Through an undersampling technique, businesses remove certain events from the majority class, which is made up of the non-fraudulent transactions. The goal is to create a balanced dataset from which a model can learn to detect fraudulent transactions as accurately as possible.

The benefit of using machine learning algorithms to detect banking or credit card fraud is that over time, machine learning recognizes new patterns. The models can adapt, which is particularly important as individuals develop new ways to defraud others. But for a business’s machine learning to become more accurate over time, it must have a strong foundation of a balanced dataset. Any machine learning algorithm is only as good as its data, and imbalanced data will inevitably lead to inaccurate results.

Undersampling Advantages and Disadvantages

The main advantage of undersampling is that data scientists can correct imbalanced data to reduce the risk of their analysis or machine learning algorithm skewing toward the majority. Without resampling, scientists might run a classification model that reports 90% accuracy. On closer inspection, though, they will find that nearly all of its correct predictions fall within the majority class and that it rarely identifies minority events. This is known as the accuracy paradox.
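The accuracy paradox is easy to reproduce with a toy example. The sketch below scores a hypothetical model that always predicts the majority class on a dataset with a 9:1 imbalance. The helper names and the data are assumptions for illustration.

```python
def accuracy(y_true, y_pred):
    """Fraction of events whose predicted label matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def recall(y_true, y_pred, positive=1):
    """Fraction of true positive-class events that the model identified."""
    positives = [p for t, p in zip(y_true, y_pred) if t == positive]
    return sum(p == positive for p in positives) / len(positives)

# 10 minority events (label 1) and 90 majority events (label 0).
y_true = [1] * 10 + [0] * 90
# A model that ignores the minority class entirely.
y_pred = [0] * 100

acc = accuracy(y_true, y_pred)
rec = recall(y_true, y_pred)
print(acc, rec)
```

The model is 90% accurate yet finds none of the minority events, which is why metrics such as recall on the minority class are worth checking alongside accuracy.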

Simply put, the minority events are harder to predict because there are fewer of them. An algorithm has less information to learn from. But resampling through undersampling can correct this issue by making the majority class the same size as the minority class for the purpose of the data analysis.

Other advantages of undersampling include lower storage requirements and faster run times for analyses. Less data means businesses need less storage and time to gain valuable insights.

During undersampling, data scientists or the machine learning algorithm removes data from the majority class. Because of this, scientists can lose potentially important information. Think about the difference in the amount of data in the majority vs. minority classes. The ratio could be 500:1, 1,000:1, 100,000:1, or 1,000,000:1. Removing enough majority events to make the majority class the same or a similar size to the minority class results in a significant loss of data.

Loss of potentially important data is a particular risk with random undersampling, in which events are removed without any consideration for what they are and how useful they might be to the analysis. Data scientists may address this disadvantage by using a more informed undersampling technique. They also may combat the loss of potentially important data by combining undersampling and oversampling techniques. This way, they do not just reduce the majority class but also increase the minority class to reach a balanced dataset.

Another disadvantage of undersampling is that the sample of the majority class chosen could be biased. The sample might not accurately represent the real world, and the result of the analysis may be inaccurate.

Because of these disadvantages, some scientists might prefer oversampling. It doesn’t lead to any loss of information, and in some cases, may perform better than undersampling. But oversampling isn’t perfect either. Because oversampling often involves replicating minority events, it can lead to overfitting. To balance these issues, certain scenarios might require a combination of both to obtain the most lifelike dataset and accurate results.

Conclusion

Undersampling is a useful way to balance imbalanced datasets. But it is imperative for data scientists to consider the information potentially lost through undersampling. Developing a dataset that behaves like the natural world takes time and care. Data scientists consider the various undersampling techniques as well as methods that combine undersampling and oversampling. They experiment with these methods to determine the most effective way to resample their data and obtain the most accurate results for their employers.

Data science and machine learning are growing fields. According to O*NET OnLine, employment for data scientists is expected to increase 15% or more between 2020 and 2030. The 2020 median pay for computer and information research scientists was $98,230 per year.

Data scientists work in a variety of industries and locations. If you’re interested in becoming a data scientist, you might want to consider researching data science bootcamps or data science master’s degrees to find the best educational path for you.

This page includes information from O*NET OnLine by the U.S. Department of Labor, Employment and Training Administration (USDOL/ETA). Used under the CC BY 4.0 license. O*NET® is a trademark of USDOL/ETA.

Last updated: April 2022